
Your agent is in production. Users are sending requests. The agent is responding. Everything looks fine.

Then a user complains that the agent gave them completely wrong advice. You open your monitoring dashboard. Request received. Response sent. 200 OK. Latency: 3.2 seconds.

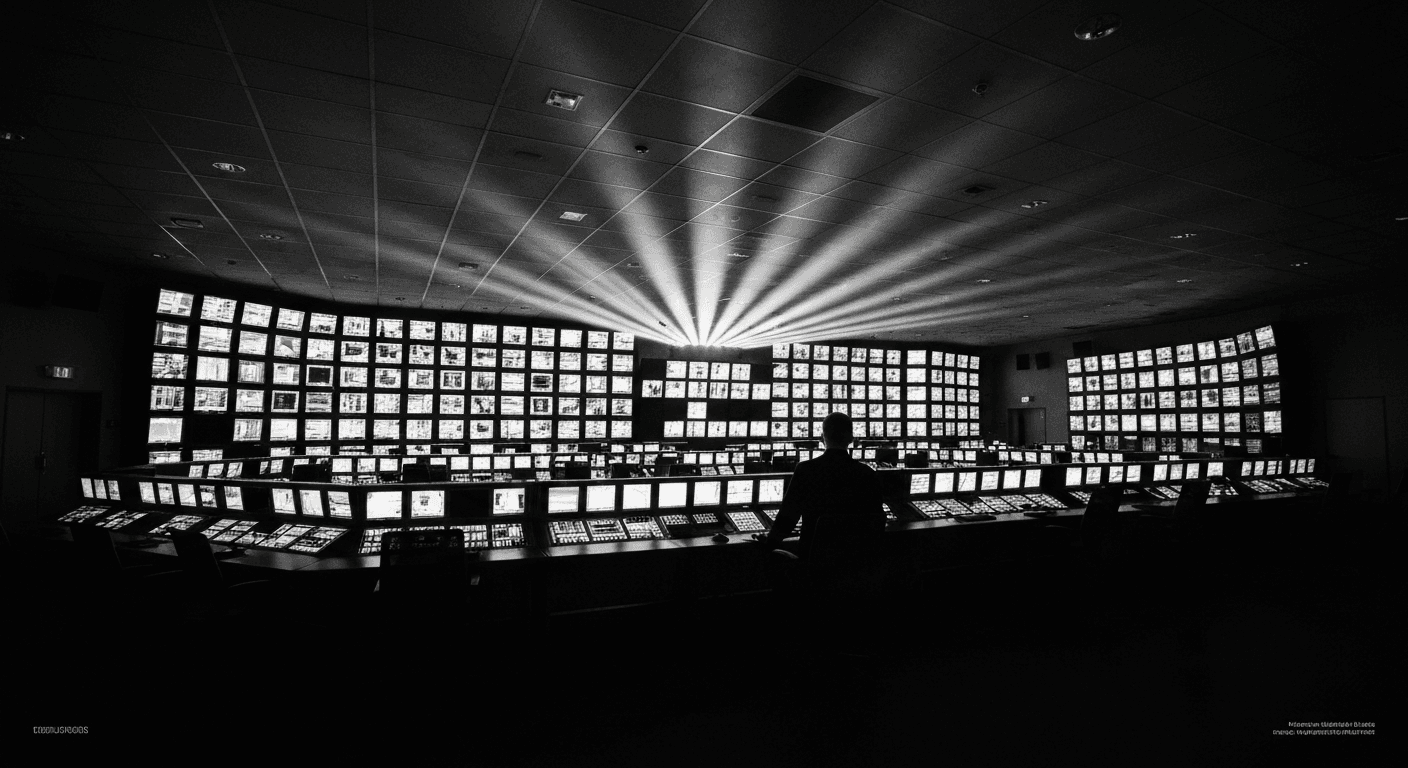

None of that tells you anything useful. You have no idea what the agent "thought." What information it considered. What alternatives it weighed. Why it produced the output it did.

This is the observability gap that kills agent systems in production. You can see that things happened, but you cannot see why.

Why Traditional Monitoring Fails

Traditional application monitoring was designed for deterministic systems. The same input produces the same output. If something goes wrong, you check the logs, find the error, and fix the code path.

Agent systems are non-deterministic. The same input might produce different outputs on different runs. There is often no single "code path" to fix, because the agent's behavior emerges from the interaction between its instructions, the model's reasoning, and the specific context of each request. Traditional monitoring gives you the "what" but misses the "why."

You need a new monitoring paradigm. One that captures not just inputs and outputs, but the entire reasoning chain between them.

Distributed Tracing for Agent Workflows

The foundation of agent observability is distributed tracing adapted for AI workflows.

A trace starts when a request arrives and ends when the response is sent. Between those points, every significant step gets a span. The orchestrator deciding which agent to invoke. The agent constructing its prompt. The LLM call with its full input and output. Each tool call with parameters and results. The agent processing tool results and deciding next steps. The final response construction.

Each span is annotated with metadata. Duration. Token count. Cost. Model used. Tool called. And critically, a quality assessment. Was this step successful? Did the output meet expectations?

This gives you a complete, hierarchical view of every agent execution. When something goes wrong, you can drill from the top-level request down through each step to find exactly where the problem occurred and why.
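The span hierarchy described above can be sketched with nothing but the standard library. This is a minimal illustration, not a production tracer: the `Tracer` and `Span` classes, the attribute names (`input_tokens`, `cost_usd`, `quality_ok`), and the example values are all assumptions made for this sketch. In a real system you would more likely emit spans through a tracing library and fill in the attributes from actual API responses.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: Optional[float] = None
    attributes: Dict[str, Any] = field(default_factory=dict)

    @property
    def duration_ms(self) -> Optional[float]:
        # None while the span is still open.
        if self.end is None:
            return None
        return (self.end - self.start) * 1000.0


class Tracer:
    """Collects the spans of one request in memory (hypothetical helper)."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: List[Span] = []
        self._stack: List[Span] = []  # currently open spans, innermost last

    @contextmanager
    def span(self, name: str, **attributes: Any):
        # The innermost open span, if any, becomes the parent.
        parent_id = self._stack[-1].span_id if self._stack else None
        current = Span(name, self.trace_id, uuid.uuid4().hex[:16], parent_id,
                       time.perf_counter(), attributes=dict(attributes))
        self.spans.append(current)
        self._stack.append(current)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self._stack.pop()


tracer = Tracer()
with tracer.span("request", user_id="u-123") as root:
    with tracer.span("llm_call", model="example-model") as llm:
        # Placeholder numbers; real values would come from the LLM API response.
        llm.attributes.update(input_tokens=512, output_tokens=128, cost_usd=0.004)
    with tracer.span("tool_call", tool="search_orders") as tool:
        tool.attributes.update(params={"order_id": "A-1"}, success=True)
    root.attributes["quality_ok"] = True  # per-step quality assessment

for s in tracer.spans:
    print(s.name, s.parent_id, s.attributes)
```

Because every span carries its parent's ID, the flat list can be rebuilt into a tree, which is what lets you drill from the request down to the individual LLM or tool call.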

Quality Metrics That Actually Matter

Standard metrics like latency, throughput, and error rate tell you about system health. They tell you nothing about agent quality. You need domain-specific quality metrics.

Decision accuracy. For agents that make decisions or recommendations, what percentage of decisions are correct? This requires either human evaluation of sampled decisions or automated evaluation using an LLM judge. Track this over time. Establish a baseline. Alert when it drops.

Response relevance. Does the agent's response actually address what the user asked? A response can be factually correct but completely irrelevant. Measure relevance through user feedback signals (did they ask a follow-up? did they rephrase? did they abandon the conversation?) and automated evaluation.

Factual consistency. Does the agent contradict itself? Does it contradict the provided context? Does it state things that are verifiably wrong? These are hallucination metrics, and they are critical for any agent that users rely on for information.

Task completion rate. When users engage the agent to accomplish something, how often do they actually accomplish it? This is the ultimate quality metric. Everything else is a proxy for this.

User satisfaction. Explicit feedback (thumbs up/down, ratings) and implicit feedback (session duration, return rate, escalation to human support). These lag behind other metrics but provide the ground truth on whether your agent is actually helping.

Track each metric per agent, per model version, per task type, and per time period. The aggregates tell you the overall story. The breakdowns tell you where to focus.
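As a rough sketch of the aggregate-versus-breakdown idea, the snippet below computes decision accuracy and task completion rate over a handful of scored interactions, first overall and then per agent and model version. The `Evaluation` record, its field names, and the sample data are invented for illustration; in practice the `correct` and `completed` flags would come from human review, an LLM judge, or user signals.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Sequence, Tuple


@dataclass(frozen=True)
class Evaluation:
    agent: str
    model_version: str
    task_type: str
    correct: bool    # decision accuracy signal
    completed: bool  # task completion signal


def breakdown(evals: Iterable[Evaluation],
              key_fields: Sequence[str]) -> Dict[Tuple[str, ...], Dict[str, float]]:
    """Group evaluations by the given fields and compute rates per group."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "completed": 0})
    for e in evals:
        key = tuple(getattr(e, f) for f in key_fields)
        bucket = totals[key]
        bucket["n"] += 1
        bucket["correct"] += int(e.correct)
        bucket["completed"] += int(e.completed)
    return {
        key: {
            "n": b["n"],
            "decision_accuracy": b["correct"] / b["n"],
            "task_completion_rate": b["completed"] / b["n"],
        }
        for key, b in totals.items()
    }


# Made-up sample data.
evals = [
    Evaluation("billing", "v1", "refund", correct=True, completed=True),
    Evaluation("billing", "v1", "refund", correct=False, completed=True),
    Evaluation("billing", "v2", "refund", correct=True, completed=True),
    Evaluation("support", "v1", "faq", correct=True, completed=False),
]

overall = breakdown(evals, ())  # empty key: one aggregate bucket
by_agent_model = breakdown(evals, ("agent", "model_version"))

print(overall[()])
for key, stats in sorted(by_agent_model.items()):
    print(key, stats)
```

Here the aggregate accuracy hides the fact that one agent and model version combination is doing noticeably worse than the others, which is exactly what the breakdown is for.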

The Reasoning Log

This is the piece most teams miss, and it is the most important one.

Every agent execution should produce a reasoning log. Not just the input and output. The full chain of thought. What context the agent retrieved. What it decided to do and why. What tools it called and how it interpreted the results. What alternatives it considered and rejected.

Store these reasoning logs alongside your traces. When a user reports a bad response, you pull the reasoning log and see exactly what went wrong. Was the context retrieval off? Was the reasoning sound but the context incomplete? Did the agent misinterpret a tool result? Each failure mode has a different fix, and the reasoning log tells you which one you are dealing with.

This is also invaluable for improving your agent over time. Reviewing reasoning logs for correct responses shows you what good looks like. Reviewing logs for incorrect responses shows you the failure patterns. These patterns inform prompt improvements, tool refinements, and architectural changes.
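One possible shape for such a log is a structured record keyed by trace ID and serialized as one JSON line per execution. The sketch below is an assumption about what that could look like, not a standard schema: the `ReasoningLog` and `ReasoningStep` classes, the step kinds, and the example content are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass
class ReasoningStep:
    kind: str     # e.g. "retrieval", "tool_call", "rejected_alternative", "decision"
    summary: str  # short human-readable description of the step
    detail: Dict[str, Any] = field(default_factory=dict)


@dataclass
class ReasoningLog:
    trace_id: str  # links the log to the trace for the same request
    user_input: str
    steps: List[ReasoningStep] = field(default_factory=list)
    final_output: str = ""
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def add(self, kind: str, summary: str, **detail: Any) -> None:
        self.steps.append(ReasoningStep(kind, summary, detail))

    def to_json(self) -> str:
        # One JSON object per execution, suitable for a line-oriented log store.
        return json.dumps(asdict(self), ensure_ascii=False)


log = ReasoningLog(trace_id="trace-001", user_input="Can I return an opened item?")
log.add("retrieval", "Fetched return policy", doc_ids=["policy-7"])
log.add("tool_call", "Looked up order", tool="get_order",
        result={"status": "delivered"})
log.add("rejected_alternative", "Escalate to a human",
        reason="policy covers this case")
log.add("decision", "Answer directly from the policy text")
log.final_output = "Yes, within 30 days of delivery."

line = log.to_json()
print(line)
```

Keeping the step `kind` explicit makes it straightforward to filter logs by failure mode later, for example pulling every execution where a retrieval step returned no documents.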

Alerting Without Noise

Agent systems produce a lot of data. Without careful alerting design, you drown in notifications and start ignoring them.

Threshold-based alerts catch acute problems. Quality drops below a hard floor. Error rate spikes above a ceiling. Token consumption exceeds budget. These should fire rarely and always indicate a real problem.

Trend-based alerts catch gradual degradation. Quality declining 1% per week does not trigger a threshold alert. But over a month, it adds up to roughly a 4% degradation that nobody noticed. Compute rolling averages and alert when the trend is consistently negative.

Anomaly-based alerts catch the unexpected. A sudden change in the distribution of tool calls. An unusual pattern in response lengths. A shift in the types of requests being made. These might indicate a problem, a change in user behavior, or an attack. They warrant investigation.
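The threshold and trend checks described above can be sketched in a few lines. This is one simple interpretation, under the assumption that "consistently negative" means the rolling average fell in each of the last few periods. The function names, window size, floor value, and weekly scores are illustrative choices, not recommendations.

```python
from statistics import mean
from typing import List, Sequence


def rolling_average(values: Sequence[float], window: int) -> List[float]:
    """Mean of each consecutive `window`-sized slice of `values`."""
    return [mean(values[i - window + 1 : i + 1])
            for i in range(window - 1, len(values))]


def threshold_alert(latest: float, floor: float) -> bool:
    """Fires when the latest quality score is below a hard floor."""
    return latest < floor


def trend_alert(values: Sequence[float], window: int, periods: int) -> bool:
    """Fires when the rolling average fell in each of the last `periods` steps."""
    averages = rolling_average(values, window)
    if len(averages) < periods + 1:
        return False  # not enough history to judge a trend
    recent = averages[-(periods + 1):]
    return all(later < earlier for earlier, later in zip(recent, recent[1:]))


# Made-up weekly quality scores: a slow decline that never crosses the floor.
declining = [0.90, 0.90, 0.89, 0.88, 0.87, 0.86]
improving = [0.88, 0.89, 0.90, 0.90, 0.91, 0.92]

print(threshold_alert(declining[-1], floor=0.80))
print(trend_alert(declining, window=2, periods=3))
print(trend_alert(improving, window=2, periods=3))
```

With these numbers the threshold check stays quiet on the declining series while the trend check fires, which is the gap trend-based alerts are meant to close.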

Route alerts to the right people. Quality alerts go to the ML team. Infrastructure alerts go to the ops team. Security anomalies go to the security team. A single alert channel for everything guarantees that everything gets ignored.

Building the Observability Stack

You do not need to build everything from scratch. The observability stack for agent systems combines existing tools with agent-specific additions.

Tracing. Use OpenTelemetry as the foundation. Add custom instrumentation for LLM calls, tool executions, and reasoning steps. Several libraries now provide auto-instrumentation for popular LLM APIs.

Metrics. Use Prometheus or your existing metrics platform. Define custom metrics for quality, cost, and agent-specific measurements. Build dashboards in Grafana or your preferred visualization tool.

Logging. Store reasoning logs in a structured format. Elasticsearch or a similar system works well for search and analysis. Retention policies should keep at least 30 days of full logs for debugging.

Evaluation. Build or adopt an evaluation pipeline that samples production interactions and scores them against your quality criteria. This is the agent-specific piece that does not exist in standard monitoring stacks.
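As a minimal sketch of the evaluation piece, the snippet below samples a fraction of production interactions and scores each one against a set of named criteria. Everything here is an assumption for illustration: the function names, the record fields, the pass cutoff of 0.5, and especially the criteria, which are trivial heuristics standing in for the human reviewers or LLM judge a real pipeline would call.

```python
import random
from typing import Any, Callable, Dict, List, Optional

Interaction = Dict[str, str]
Criterion = Callable[[Interaction], float]


def sample_interactions(interactions: List[Interaction], rate: float,
                        seed: Optional[int] = None) -> List[Interaction]:
    """Keep each interaction independently with probability `rate`."""
    rng = random.Random(seed)
    return [item for item in interactions if rng.random() < rate]


def evaluate(sampled: List[Interaction],
             criteria: Dict[str, Criterion]) -> List[Dict[str, Any]]:
    """Score every sampled interaction against every criterion."""
    results = []
    for item in sampled:
        scores = {name: float(check(item)) for name, check in criteria.items()}
        results.append({
            "id": item["id"],
            "scores": scores,
            # Assumed cutoff: an interaction passes if every score is >= 0.5.
            "passed": all(score >= 0.5 for score in scores.values()),
        })
    return results


# Placeholder criteria; a real pipeline would plug in a judge here.
criteria: Dict[str, Criterion] = {
    "non_empty": lambda i: 1.0 if i["response"].strip() else 0.0,
    "under_length_limit": lambda i: 1.0 if len(i["response"]) < 500 else 0.0,
}

interactions = [
    {"id": "a", "question": "What is the refund window?",
     "response": "Refunds are accepted within 30 days."},
    {"id": "b", "question": "Where is my order?", "response": ""},
]

sampled = sample_interactions(interactions, rate=1.0, seed=7)
results = evaluate(sampled, criteria)
for r in results:
    print(r)
```

The scores produced this way are what feed the quality metrics and alerts, so the sampling rate is a trade-off between evaluation cost and how quickly a quality drop becomes visible.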

The investment in observability pays for itself quickly. Without it, you are flying blind. With it, you can diagnose problems in minutes instead of days, track improvements with confidence, and build the data foundation for systematic agent quality improvement.
